Using Test Template

This example shows how to use the AlchemyJ testing framework to test a function point in AlchemyJ Studio and in the generated Java API. For details on each setting in the test template, see the Test Template page. Before testing the API, generate the API package with the Generate API function on the AlchemyJ ribbon. To test a REST API, the service must also be started. Test case preparation and execution are the same for the Java API and the REST API.

Sample Test Function

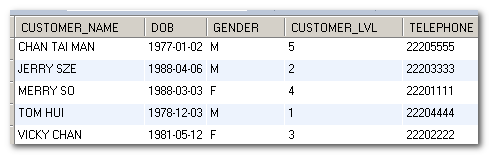

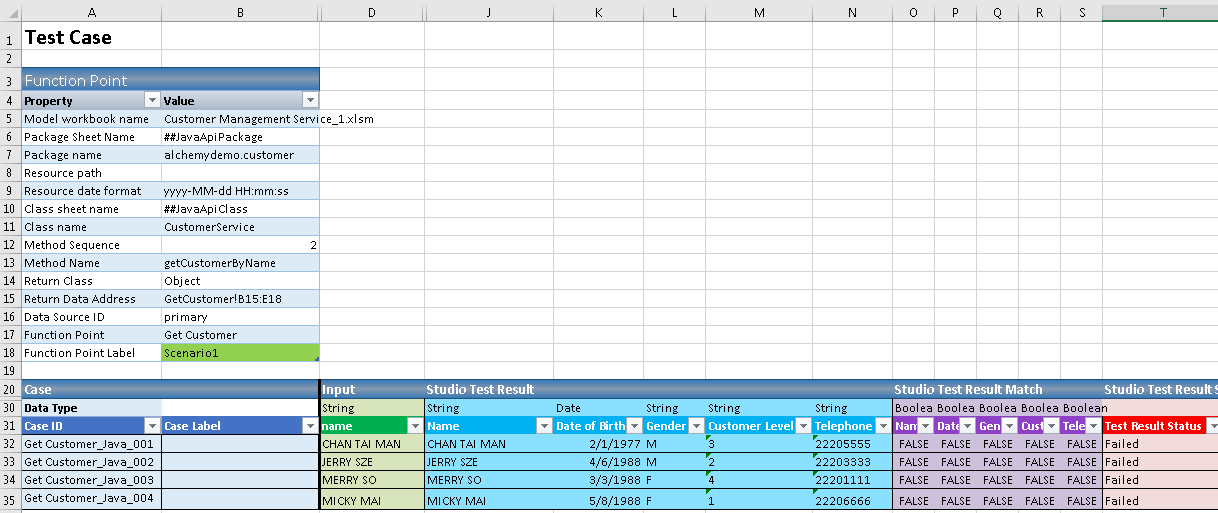

The sample function point gets customer information from the database by name. The test data in the database is shown below.

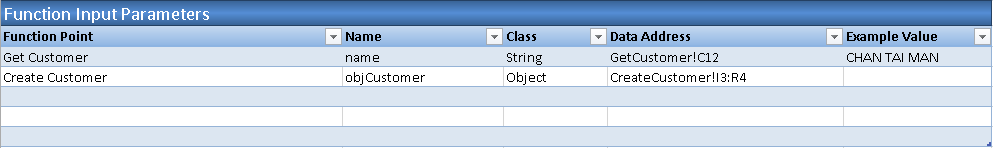

The input parameter is 'name' and the example value is 'CHAN TAI MAN' as defined in the Function Input Parameters section of the model workbook.

Create a Test Template

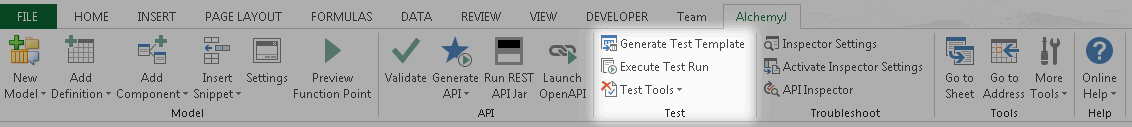

Open the model workbook, go to the AlchemyJ ribbon, and click Generate Test Template on the Test tab.

AlchemyJ opens a window where you select the function point to test. Hold 'Shift' to select multiple function points.

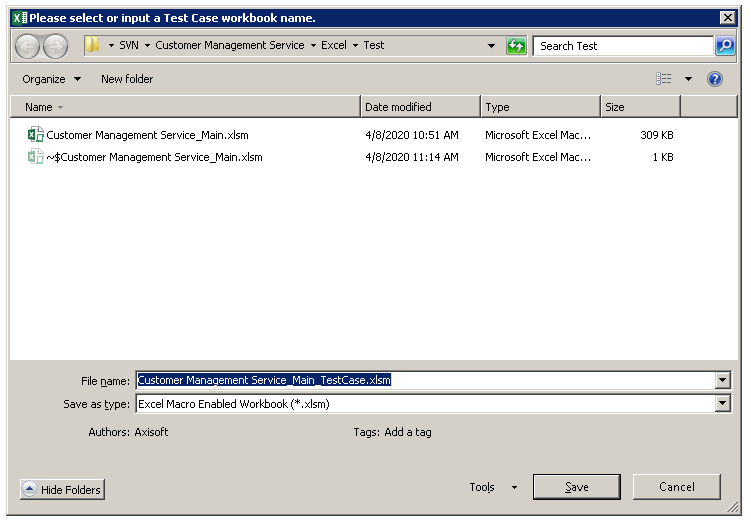

Specify the file name under which to save the test case template. You can also choose an existing test workbook. In that case, AlchemyJ only appends the new test worksheets to the selected workbook. We recommend saving the test workbook in the same directory as the model workbook.

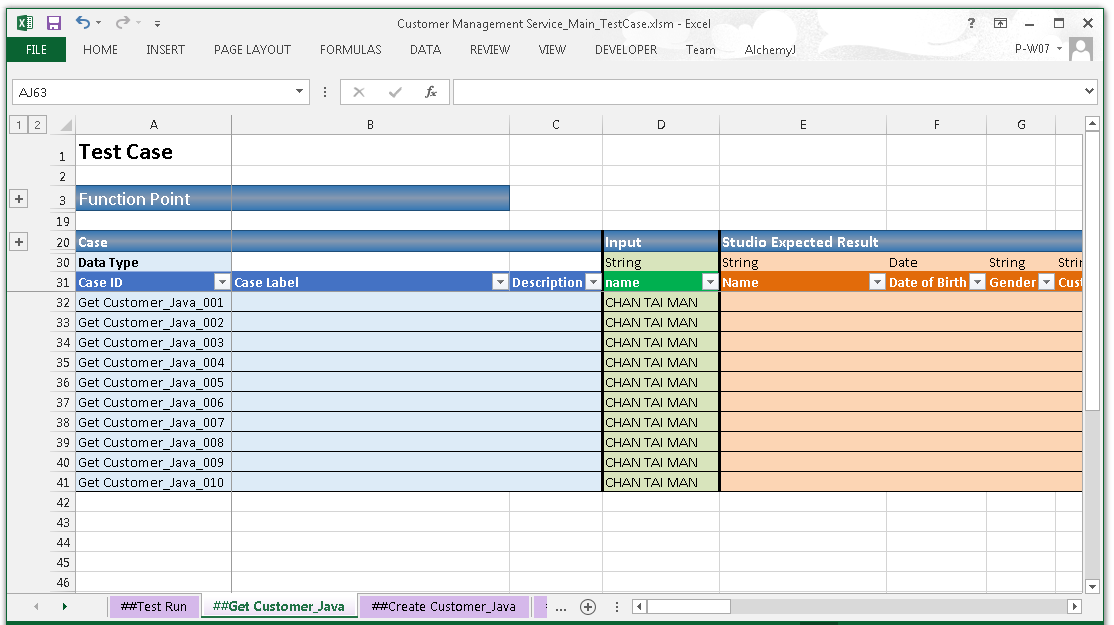

AlchemyJ automatically creates a test case worksheet for each selected function point. If the workbook is newly created, it also adds a ##Test Run worksheet. The test case worksheet is named ##Function Point_Java or ##Function Point_REST, depending on the selected package. Since we selected the Get Customer and Create Customer function points in the selection window, the ##Get_Customer_Java and ##Create_Customer_Java test case worksheets are added to the test workbook.

Ten test cases are generated in each test case worksheet. Each input column is prefilled with the example value defined in the model workbook. The number of response rows in a test case depends on the range defined by the response data address. For example, if the response data address covers 5 rows, each test case has 5 rows for response data.
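The naming pattern can be sketched in a few lines of Python. This is only an illustration inferred from the two worksheet names mentioned above, not AlchemyJ's own code: the helper `worksheet_name` is hypothetical, and the rule that spaces become underscores is an assumption based on those examples.

```python
def worksheet_name(function_point: str, package: str) -> str:
    """Build a name such as '##Get_Customer_Java' (hypothetical helper)."""
    if package not in ("Java", "REST"):
        raise ValueError(f"unknown package: {package!r}")
    # Assumption: spaces in the function point name are replaced by underscores,
    # as suggested by '##Get_Customer_Java' and '##Create_Customer_Java'.
    return f"##{function_point.strip().replace(' ', '_')}_{package}"


names = [worksheet_name(fp, "Java") for fp in ("Get Customer", "Create Customer")]
print(names)  # ['##Get_Customer_Java', '##Create_Customer_Java']
```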

Update Test Cases

Since the default value is the same for all cases, we need to update the inputs to match the test scenarios. If you need fewer than 10 test cases, delete the unused ones. Otherwise, all blank test cases will be marked as 'Passed', which can distort your analysis of the test execution summary. If you need more cases, click Test Tools - Append Test Cases on the Test tab.

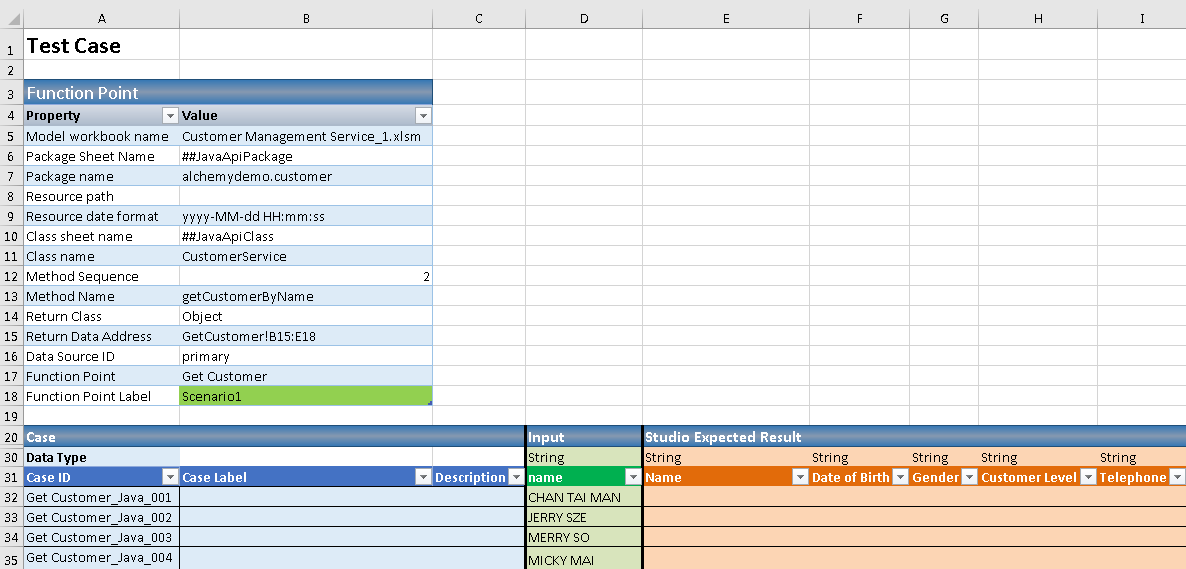

In this example, we add the function point label 'Scenario1', modify the test case input data, and remove cases 5 to 10.

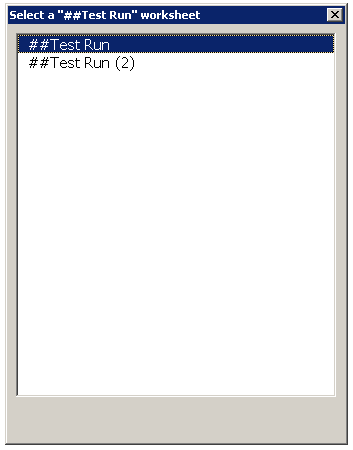

Now we can click Execute Test Run on the AlchemyJ ribbon to run the tests in AlchemyJ Studio. Because nothing has been defined in the Test Case Criteria section of the Test Run worksheet yet, all test cases in the test workbook are executed in AlchemyJ Studio. If the workbook contains multiple test run worksheets, a window asks which one to use. Each execution can use only one test run worksheet.

The test results are written to the Studio Test Result section. The execution result shows Failed for every case. We can ignore this for now because the expected results have not been defined yet.

Next, verify the Studio test results. If they are not as expected, check and modify the model. We recommend regenerating the test case worksheet after modifying the model workbook. You can also use Test Tools - Load Test Case to Model to copy the test data into the model workbook and investigate why a result is not as expected.

If the Studio results are as expected, click Test Tools - Copy Test Result to Empty Expected Result on the AlchemyJ ribbon. The first time you do this, the Studio test result is copied to both the Studio Expected Result and API Expected Result sections. If the test result changes later and you want to update the expected result as well, use Test Tools - Copy Selected Result to Expected Result to update it for the selected cases.

The Studio Test Result Match and API Test Result Match sections use formulas to check, column by column, whether the test result equals the expected result. You can modify a formula if a column should be ignored or needs a special rule. For example, you may want to ignore a creation date-time column, since its value is different on every run.
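The idea behind the match columns can be illustrated outside the workbook with a short Python sketch. It is not the formula AlchemyJ generates: the function names, the column names `name` and `created_at`, the sample timestamps, and the rule that a case passes only when every column matches are all assumptions made for illustration.

```python
def match_columns(actual: dict, expected: dict, ignore: frozenset = frozenset()) -> dict:
    """Return a per-column match flag; ignored columns always count as a match."""
    return {
        column: column in ignore or actual.get(column) == expected_value
        for column, expected_value in expected.items()
    }


def case_status(matches: dict) -> str:
    # Assumption: a case passes only if every compared column matches.
    return "Passed" if all(matches.values()) else "Failed"


# Hypothetical column names and values, for illustration only.
expected = {"name": "CHAN TAI MAN", "created_at": "2024-01-01 09:00:00"}
actual = {"name": "CHAN TAI MAN", "created_at": "2024-01-02 10:30:00"}

strict = match_columns(actual, expected)
relaxed = match_columns(actual, expected, ignore=frozenset({"created_at"}))

print(case_status(strict))   # Failed
print(case_status(relaxed))  # Passed
```

Ignoring the volatile column keeps the comparison stable across runs while still checking every other column.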

Done! Now, we have the expected results for both Studio and API.

Execute Test Case

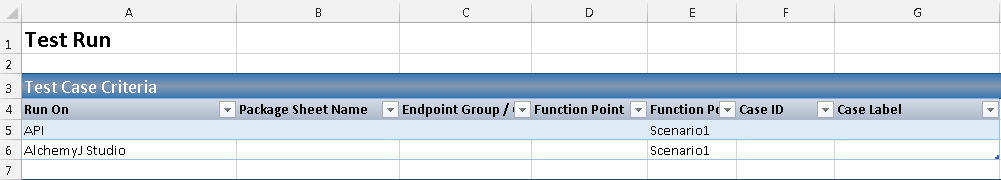

Go to the ##Test Run worksheet and enter the test case criteria for both AlchemyJ Studio and the API.

Enter 'Scenario1' as the Function Point Label so that all the cases we just defined run on both AlchemyJ Studio and the API.

Go to the AlchemyJ ribbon and, on the Test tab, click Execute Test Run. The execution result is shown in the Test Execution Summary section and the Test Result section.
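Selecting cases by label can be pictured as a simple filter. The sketch below is an assumption-laden illustration rather than AlchemyJ's implementation: the `Case` class, the `select_cases` helper, the label 'Scenario2', and the input 'NO SUCH NAME' are hypothetical. Only the behaviour of running every case when no criterion is defined is taken from the description above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Case:
    case_no: int
    label: str       # the Function Point Label of the test case
    name_input: str  # the 'name' input parameter


def select_cases(cases: List[Case], label: Optional[str] = None) -> List[Case]:
    """Return the cases with a matching label; with no criterion, return all cases."""
    if not label:
        return list(cases)
    return [case for case in cases if case.label == label]


cases = [
    Case(1, "Scenario1", "CHAN TAI MAN"),
    Case(2, "Scenario1", "NO SUCH NAME"),   # hypothetical input
    Case(3, "Scenario2", "CHAN TAI MAN"),   # hypothetical label
]

print([case.case_no for case in select_cases(cases, "Scenario1")])  # [1, 2]
print(len(select_cases(cases)))  # 3
```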

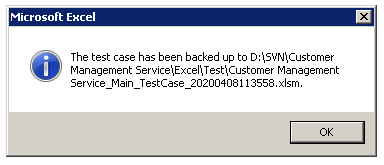

To quickly back up the current test template workbook, click Test Tools - Backup Test Template on the Test tab. It saves a copy of the workbook in the same folder, using the same file name with the current time appended as a suffix.
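As a rough illustration of that naming scheme, the following Python sketch builds a backup path from a workbook path. The exact timestamp format AlchemyJ uses is not documented here, so the `%Y%m%d_%H%M%S` pattern, the underscore separator, the `.xlsx` extension, and the `backup_path` helper itself are assumptions.

```python
from datetime import datetime
from pathlib import Path


def backup_path(workbook: Path, now: datetime) -> Path:
    """Same folder, same base name, with a timestamp suffix before the extension."""
    stamp = now.strftime("%Y%m%d_%H%M%S")  # assumed format, not AlchemyJ's documented one
    return workbook.with_name(f"{workbook.stem}_{stamp}{workbook.suffix}")


# Hypothetical workbook path and a fixed time so the result is reproducible.
copy = backup_path(Path("tests/CustomerTest.xlsx"), datetime(2024, 1, 2, 10, 30, 0))
print(copy.name)  # CustomerTest_20240102_103000.xlsx
```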